Does Google Have A Racial Bias?

The Short Answer: No. Google is interested in searcher intent. Because of this, race-oriented searches will show you what Google thinks you might be looking for, not just results that match the words in your search.

Google co-founder Larry Page once described the perfect search engine as one that “understands exactly what you mean and gives you back exactly what you want.” That description sums up Google's vision for its search engine.

Google wants to understand exactly what we mean when we search. For example, when I type in the term “apple,” am I referring to the fruit or the technology company? It’s Google’s job to figure that out.

In one of its more recent updates, Google announced that it uses a language model called BERT in nearly every English-language search. BERT helps Google understand the intent behind long-tail queries phrased as questions or natural sentences. This update reflects Google’s emphasis on searcher intent: the meaning behind the words.

Searcher Intent Results Vs. Defining Results

Let’s compare Google with something that focuses more on defining results, such as a stock image website. When you search for “happy white women” on a stock photo website, its job is to say, "Happy white women? I know what that looks like. Here are all of the images I have of happy white women."

Now let's say you make the same search on Google. Google's algorithm, with help from BERT, is going to say, "Happy white women? I've had _______ many people make searches that include that phrase, and most of them turned out to be looking for one of these things. Here are all those things!"

This is heavily affected by search volume! Suppose "happy black women" is searched far more often than "happy white women." If so, the images, articles, and videos that show up for these two searches will be very different!

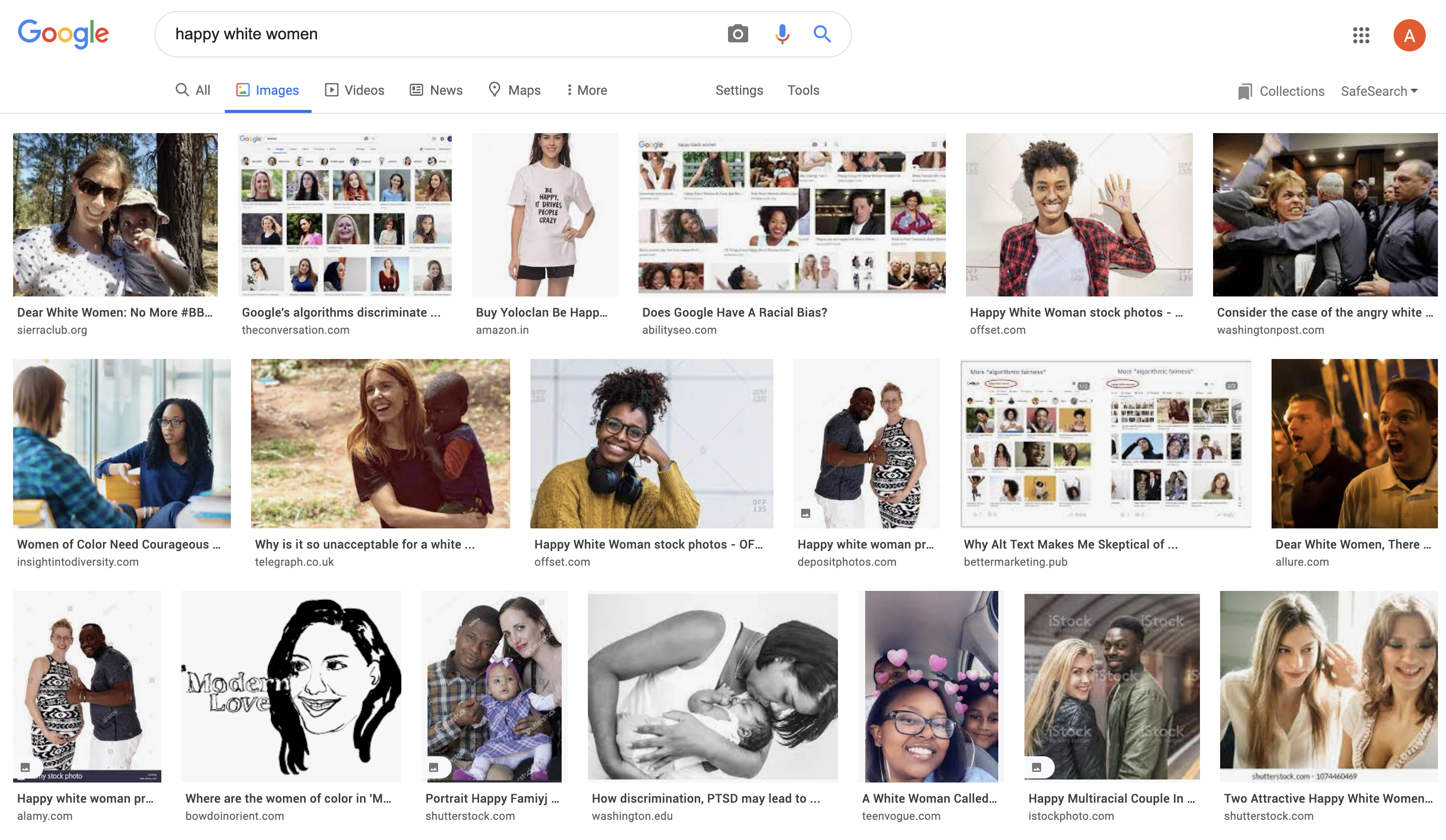

You can see these vast differences yourself with a practical exercise:

- Search for images of “happy black women”.

- Search for “happy Asian women”.

- Finally, search for “happy white women”. You’ll notice a big difference in the results!

The photos may not be what you expected, but that doesn't mean the search is "wrong" or trying to influence how you think about race or gender. Google's algorithm works the same way for every search: it shows people what Google thinks they want, not strictly what they typed.

In fact, my guess is that when you searched for "happy white women," you weren't really looking for pictures of happy white women. You were looking for information about the controversy surrounding Google searches for "happy white women." The images you found were exactly that: not images of happy white women, but images about the "happy white women" controversy.

Bottom line: Google's agenda is to give its users what they want. That's why it shows results based on searcher intent.

Special thanks to Jon Gordon who contributed to the original version of this article.

Your Articles Need Skim-Catchables

Skim-Catchables are bloggers' now-not-so-secret weapon for making readers' jobs easier: readers can skim down a page and quickly find the answer they are looking for. Engaging titles and subtitles, eye-catching infographics, and features like a TL;DR (Too long; didn’t read) summary not only help readers, but should be part of every writer's toolkit!

Topic: #Google Search, #searcher intent, #Google Index, #conversational search, #google analytics

Adam Singer helps growth-minded businesses turn marketing and operations into scalable systems that drive results. As the founder of Ability Growth Partners, Adam works with owners, managers, and marketing leaders to simplify workflows, improve visibility, and grow revenue, without the guesswork. Adam brings a rare blend of strategy, automation, and hands-on execution. He’s known for connecting the dots between digital ads, SEO, AI tools, and internal systems, transforming scattered efforts into a clear, scalable plan. With decades of web experience and credentials as a HubSpot Solutions Provider, Google Partner, and WordPress organizer, Adam has led award-winning campaigns and spoken on marketing and tech at events across the U.S., Israel, and Asia. Structure, strategy, and support that actually move the needle.
